My Latest Posts

How to Use an External Hard Drive With an Xbox Series X

It is no secret that the 802 GB of usable storage space that the Xbox Series X offers is simply not going to be enough … Read More

How to Partition an External Hard Drive

If you have owned a laptop or a computer before, chances are that you have seen drives labeled C and D. In fact, those two … Read More

How to Use an External Drive With a PS5

If you are amongst the thousands of gamers who have recently purchased one of the best external HDDs for PlayStation 5 to expand the storage … Read More

How to Increase Xbox Series X Storage: Best Series X Expansion Cards

The Xbox Series X is a major leap forward for console gaming. That means more realistic, eye-popping graphics, higher … Read More

Best External Hard Drive for PS5 – Our Top Picks & Reviews

The gaming community is buzzing with excitement over the PS5, and for good reason. One of the many questions people have about the PS5 is … Read More

What’s the Biggest External Hard Drive You Can Get in 2021?

Hard drives are getting bigger and bigger by the month. What used to be a mind-blowingly large capacity years ago (like 1TB drives) is the norm nowadays. … Read More

Hard Drive Information

Western Digital HDD Colors Explained

What Do the Western Digital HDD Colors Mean? Western Digital HDDs come in six colors: green, purple, blue, red, gold, and black. What do the … Read More

8 Best External Hard Drive Docking Stations 2021

External hard drive docking stations are one of the most useful accessories anyone can add to their tech arsenal. They can serve many purposes like … Read More

Best 5 Terabyte External Hard Drives 2021

In today’s digital world, keeping track of your data is more important than ever. Many people, however, still choose to store their files exclusively on … Read More

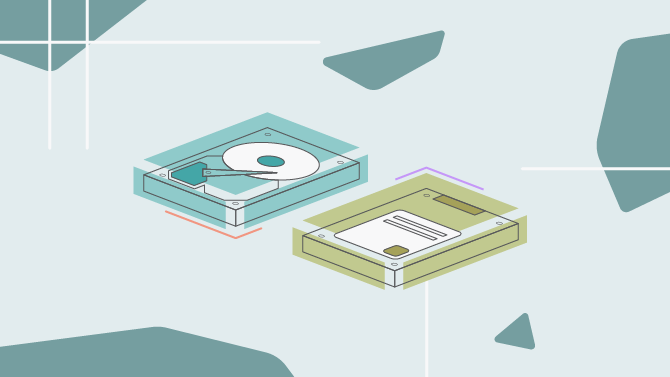

A Guide to Solid-State Drives vs Hard Drives

If you have not built your own computer or bought an external hard drive for a while, you might not have known that you now … Read More

My Most Popular Reviews

What’s the Best External SSD? A Definitive Guide & Review

If you’ve been shopping around for portable storage, you’ve no doubt come across external solid state drives – or SSDs for short. You’ll often see … Read More

The Best External Hard Drive for Gaming 2021 for PC, PlayStation or Xbox

Using an external hard drive for gaming is a great way to create more storage space on your console or PC. This is essential for … Read More

8 of the Best External Hard Drives for Music Production 2021

You do not need computing expertise to understand how important it is to save your work. When it comes to producing music, the role of … Read More

The 10 Best Rugged External HDDs in 2021 Reviewed

It goes without saying that the most important part of any computer is the hard drive. The hard drive stores all your important data, be … Read More

The 9 Best External Hard Drives for Mac 2021 Reviewed

We use Apple products all the time. But sadly, not every product is made to be Mac-compatible. In this guide, we will cover what we … Read More

The Best External Hard Drives for Photographers 2021

Photography is a genuinely fascinating activity, whether done professionally or for fun. The photographer thrives by capturing the best moments on camera, and the worst … Read More